Artificial intelligence is advancing at a breathtaking pace, promising to revolutionize medicine, science, and industry. Yet, with every breakthrough, a shadow of concern grows longer. The very power that makes AI so transformative also makes it a potential source of significant harm. To charge ahead with development without a sober examination of the consequences is to be willfully blind. It is imperative that we pause and critically examine the key ethical concerns surrounding artificial intelligence development.

This is not about being anti-progress; it’s about being pro-responsibility. Building a future where AI benefits all of humanity requires us to confront these challenges head-on. Let’s navigate the complex landscape of AI ethics and explore the most pressing issues that demand our attention today.

1. Algorithmic Bias and Fairness: When AI Amplifies Inequality

Perhaps the most immediate and best-documented ethical concern is bias. The saying “garbage in, garbage out” applies with particular force to AI. Machine learning models learn from data, and if that data reflects historical or social biases, the AI will not only learn them but can amplify them on a massive scale.

Real-World Examples:

- Hiring Tools: Amazon famously scrapped an AI recruiting tool because it penalized resumes that included the word “women’s” (as in “women’s chess club”) and showed a bias against female candidates. It had been trained on a decade of resumes submitted to the company, which were predominantly from men.

- Criminal Justice: Predictive policing algorithms and risk assessment tools used in courts have been shown to disproportionately flag members of minority groups as high-risk for future crime, often because they were trained on biased historical arrest data.

These are not mere glitches; they are systemic failures, and the ethical stakes are justice and fairness. How do we ensure our datasets are representative? How do we audit “black box” algorithms for discriminatory outcomes? Mitigating bias requires diverse development teams, rigorous fairness testing, and ongoing monitoring after deployment. Failing to do so risks codifying discrimination into our digital infrastructure.
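One concrete form of fairness testing is comparing how often a model selects people from different groups. The Python sketch below assumes the model’s yes/no decisions have already been labeled with a group attribute; the data, group names, and helper functions are hypothetical and for illustration only. It computes each group’s selection rate and the ratio of the lowest rate to the highest, flagging ratios below 0.8, a rule of thumb (the “four-fifths rule”) drawn from U.S. employment-selection guidelines. A single metric like this is a starting point for an audit, not proof of fairness or of discrimination.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of positive decisions per group.

    decisions: iterable of (group, was_selected) pairs (hypothetical format).
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        # Nobody was selected in any group, so there is no gap in rates.
        return 1.0
    return min(rates.values()) / highest


# Hypothetical outcomes from a resume-screening model (invented data).
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)  # {'group_a': 0.6, 'group_b': 0.3}
print(f"disparate impact ratio: {ratio:.2f}")  # 0.50
if ratio < 0.8:
    # Below the four-fifths heuristic: a signal to investigate, not a verdict.
    print("flag for review")
```

In practice an audit would look at several metrics (error rates per group, not just selection rates) and would be rerun as the model and its input data change over time.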

2. Privacy, Surveillance, and the Erosion of Anonymity

AI, particularly computer vision and data analytics, has given governments and corporations unprecedented surveillance capabilities. The ethical concerns in this domain strike at the heart of individual liberty.

- Facial Recognition: Widespread use by law enforcement and governments can lead to a surveillance state, chilling free speech and assembly. It also poses a massive threat to personal privacy, with the potential for constant, involuntary public identification.

- Data Harvesting: The business models of many tech companies are built on collecting vast amounts of personal data to train AI for targeted advertising. This creates detailed profiles of our behaviors, preferences, and even our mental states, often without our meaningful consent.

The ethical dilemma is the trade-off between security and efficiency on one side and personal privacy and autonomy on the other. Where do we draw the line? Robust data protection laws (such as the EU’s GDPR), limits on the use of facial recognition, and a shift toward data minimization are critical steps in addressing this challenge.

3. Lack of Transparency and the “Black Box” Problem

Many of the most powerful AI systems, particularly deep learning networks, are “black boxes.” We can see the data that goes in and the results that come out, but the internal decision-making process is incredibly complex and opaque. This lack of explainability is a major ethical hurdle.

Why It Matters:

- If an AI model denies your loan application, you have a right to know why. Without an explanation, you cannot challenge a potentially erroneous or biased decision.

- If a self-driving car causes an accident, engineers need to understand the AI’s “thought process” to assign responsibility and prevent future tragedies.

- In medicine, a doctor cannot responsibly act on an AI’s diagnosis without understanding the reasoning behind it.

The core issues here are accountability and trust. How can we trust a system we cannot understand? The field of Explainable AI (XAI) is dedicated to this problem, developing methods that make AI decisions interpretable to humans. Without transparency, AI systems cannot be held accountable, and public trust will erode.
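To see what an explanation can look like, consider the easy case: a linear scoring model, where each input’s contribution to the score is simply its weight times its value. The sketch below assumes a hypothetical loan model; the feature names, weights, bias, and threshold are invented for illustration. Deep networks do not decompose this neatly, which is why XAI methods such as LIME and SHAP try to estimate similar per-feature attributions for opaque models.

```python
# Hypothetical loan-scoring model (invented weights, not a real lender's model).
WEIGHTS = {
    "income_thousands": 0.04,
    "debt_to_income": -3.0,
    "late_payments": -0.8,
    "years_employed": 0.15,
}
BIAS = -1.0
THRESHOLD = 0.0  # approve if the score is at or above this value


def explain(applicant):
    """Score an applicant and return each feature's contribution."""
    # In a linear model, each feature adds exactly weight * value to the score.
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    # Sort from most negative (hurt the application most) to most positive.
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    return score, ranked


applicant = {
    "income_thousands": 45,
    "debt_to_income": 0.6,
    "late_payments": 2,
    "years_employed": 3,
}
score, ranked = explain(applicant)
decision = "approved" if score >= THRESHOLD else "denied"
print(f"score={score:.2f} -> {decision}")
for feature, contribution in ranked:
    print(f"  {feature}: {contribution:+.2f}")
```

Listing contributions from most negative to most positive gives a denied applicant the factors that weighed most against them, which is the kind of reason the loan example above calls for.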

4. Accountability and Legal Liability: Who is Responsible When AI Fails?

This concern flows directly from the “black box” problem. When an AI system causes harm—be it a fatal accident, a flawed medical diagnosis, or a discriminatory decision—who is to blame? Is it the developer who created the algorithm, the company that deployed it, the user who operated it, or the AI itself?

Our current legal frameworks are ill-equipped to handle this. You can’t sue an algorithm. We need clear chains of accountability, backed by new laws and regulations that define liability for AI-related harms. Drafting them will require care: the rules must not stifle innovation, yet they must give victims recourse and give developers an incentive to build safe, reliable systems.

5. Autonomous Weapons and the Future of Warfare

The development of AI-powered autonomous weapons systems, sometimes called “slaughterbots,” represents one of the most terrifying ethical frontiers. These are weapons that can identify, select, and engage targets without direct human control.

In warfare, the ethical stakes are existential. Delegating the decision to take a human life to a machine crosses a fundamental moral line. Can an algorithm reliably comply with International Humanitarian Law, which requires distinguishing between combatants and civilians and making judgments of proportionality? The risks of malfunction, hacking, and conflicts escalating beyond human control are immense. A global treaty to ban or strictly regulate such weapons is a pressing moral imperative.

6. Job Displacement and Economic Inequality

As discussed in a previous post, while AI will likely augment many jobs, it will also displace many others. The ethical concern is not just unemployment but the potential for drastic economic inequality. If the profits from hyper-efficient AI systems flow primarily to a small group of owners and highly skilled workers while a large segment of the population is left without viable employment, social unrest becomes a serious danger.

Addressing this requires proactive policies: investing in education and reskilling, considering social safety nets like universal basic income (UBI), and fostering an economic model that shares the gains of AI automation broadly.

Navigating the Path Forward

These ethical concerns are complex and intertwined, and technologists cannot solve them alone. Solutions require a multidisciplinary approach involving ethicists, sociologists, lawyers, policymakers, and the public.

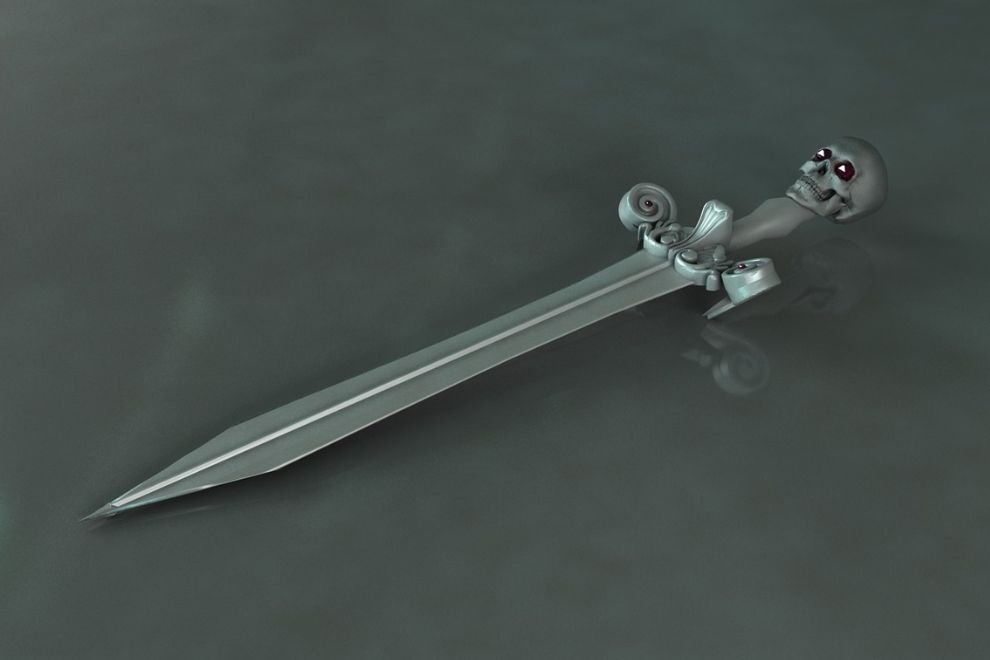

The goal is not to halt progress but to guide it. AI is a double-edged sword. By prioritizing fairness, transparency, accountability, and human well-being, we can sharpen the edge that cuts a path toward a more prosperous and just future while blunting the edge that could turn against us. The choices we make today will determine whether AI becomes our greatest tool or our most formidable adversary.